Everything you need to know about Min-Max normalization: A Python tutorial


In this post I explain what Min-Max scaling is, when to use it and how to implement it in Python using scikit-learn but also manually from scratch.

Introduction

This is my second post about the normalization techniques that are often used prior to machine learning (ML) model fitting. In my first post, I covered the standardization technique using scikit-learn’s StandardScaler class. If you are not familiar with standardization, that post covers the essentials in about 3 minutes.

In the present post, I will explain the second most famous normalization method, i.e. Min-Max scaling, using scikit-learn (class name: MinMaxScaler).

Core of the method

Another way to normalize the input features/variables (apart from standardization, which scales the features so that they have μ=0 and σ=1) is the Min-Max scaler. With it, each feature is transformed into the range [0,1], meaning that the minimum and maximum values of a feature/variable become 0 and 1, respectively.

Why normalize prior to model fitting?

The main idea behind normalization/standardization is always the same. Variables that are measured at different scales do not contribute equally to the model fitting and to the learned function, and might end up creating a bias. To deal with this potential problem, feature-wise normalization such as Min-Max scaling is usually applied prior to model fitting.

This can be very useful for some ML models such as Multi-layer Perceptrons (MLP), where back-propagation can be more stable and even faster when the input features are min-max scaled (or scaled in general) compared to using the original unscaled data.

Note: Tree-based models are usually not dependent on scaling, but non-tree models such as SVM, LDA etc. are often hugely dependent on it.
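To make the scale problem concrete, here is a minimal sketch using made-up data (the "age" and "income" columns and the helper functions `min_max` and `dist` are hypothetical, introduced only for illustration). It compares Euclidean distances between samples before and after Min-Max scaling.

```python
import numpy as np

# Hypothetical data: column 0 = age in years, column 1 = income (made-up values)
X = np.array([
    [25.0, 50_000.0],
    [60.0, 51_000.0],
    [26.0, 90_000.0],
])

def min_max(a):
    """Scale each column of `a` to the range [0, 1]."""
    return (a - a.min(axis=0)) / (a.max(axis=0) - a.min(axis=0))

def dist(a, i, j):
    """Euclidean distance between rows i and j of `a`."""
    return np.linalg.norm(a[i] - a[j])

X_scaled = min_max(X)

# Before scaling, the income column dominates the distance
raw_01 = dist(X, 0, 1)  # samples differ mostly in age
raw_02 = dist(X, 0, 2)  # samples differ mostly in income
print(raw_01, raw_02)

# After scaling, both features contribute on a comparable scale
scaled_01 = dist(X_scaled, 0, 1)
scaled_02 = dist(X_scaled, 0, 2)
print(scaled_01, scaled_02)
```

On the raw data, a 35-year age gap barely registers next to the income difference. After scaling, the two distances are of similar size, which is what a distance-based model needs to treat both features fairly.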

The mathematical formulation
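
For a single feature x, the transformation can be written as below. This form is reconstructed to agree with the manual implementation in the Python working example; the symbols for the per-feature minimum and maximum are notation assumed here.

```latex
x_{\text{scaled}} = \frac{x - x_{\min}}{x_{\max} - x_{\min}}
```

For example, for a feature with values 2, 4 and 10 we have x_min = 2 and x_max = 10, so the value 4 maps to (4 - 2) / (10 - 2) = 0.25, while 2 maps to 0 and 10 maps to 1.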

Python working example

Here we will use the famous iris dataset that is available through scikit-learn.

Reminder: scikit-learn functions expect as input a numpy array X with shape [samples, features/variables].

from sklearn.datasets import load_iris
from sklearn.preprocessing import MinMaxScaler
import numpy as np

# use the iris dataset
X, y = load_iris(return_X_y=True)
print(X.shape)
# (150, 4) # 150 samples (rows) with 4 features/variables (columns)

# build the scaler model
scaler = MinMaxScaler()

# fit the scaler (in a real workflow, fit using the train set only)
scaler.fit(X)

# transform the data (in a real workflow, this would also be the test set)
X_scaled = scaler.transform(X)

# Verify minimum value of all features
X_scaled.min(axis=0)
# array([0., 0., 0., 0.])

# Verify maximum value of all features
X_scaled.max(axis=0)
# array([1., 1., 1., 1.])

# Manually normalise without using scikit-learn
X_manual_scaled = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# Verify manually VS scikit-learn estimation
print(np.allclose(X_scaled, X_manual_scaled))
# True
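
The comments in the example mention a train set and a test set, although the whole of X is used for both steps. A minimal sketch of the split workflow could look like the following; the `test_size` and `random_state` values are arbitrary choices made here, not part of the original example.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

X, y = load_iris(return_X_y=True)

# Hold out a test set (test_size and random_state are arbitrary here)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

scaler = MinMaxScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn min/max on the train set only
X_test_scaled = scaler.transform(X_test)        # reuse the train min/max

# The train set spans exactly [0, 1]; the test set may fall slightly outside
print(X_train_scaled.min(axis=0), X_train_scaled.max(axis=0))
print(X_test_scaled.min(axis=0), X_test_scaled.max(axis=0))

# inverse_transform maps scaled values back to the original units
X_train_back = scaler.inverse_transform(X_train_scaled)

# feature_range selects a target interval other than the default (0, 1)
X_train_pm1 = MinMaxScaler(feature_range=(-1, 1)).fit_transform(X_train)
```

Fitting on the train set only keeps information about the test set out of the preprocessing step. The price is that test values below the train minimum or above the train maximum end up outside [0, 1].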

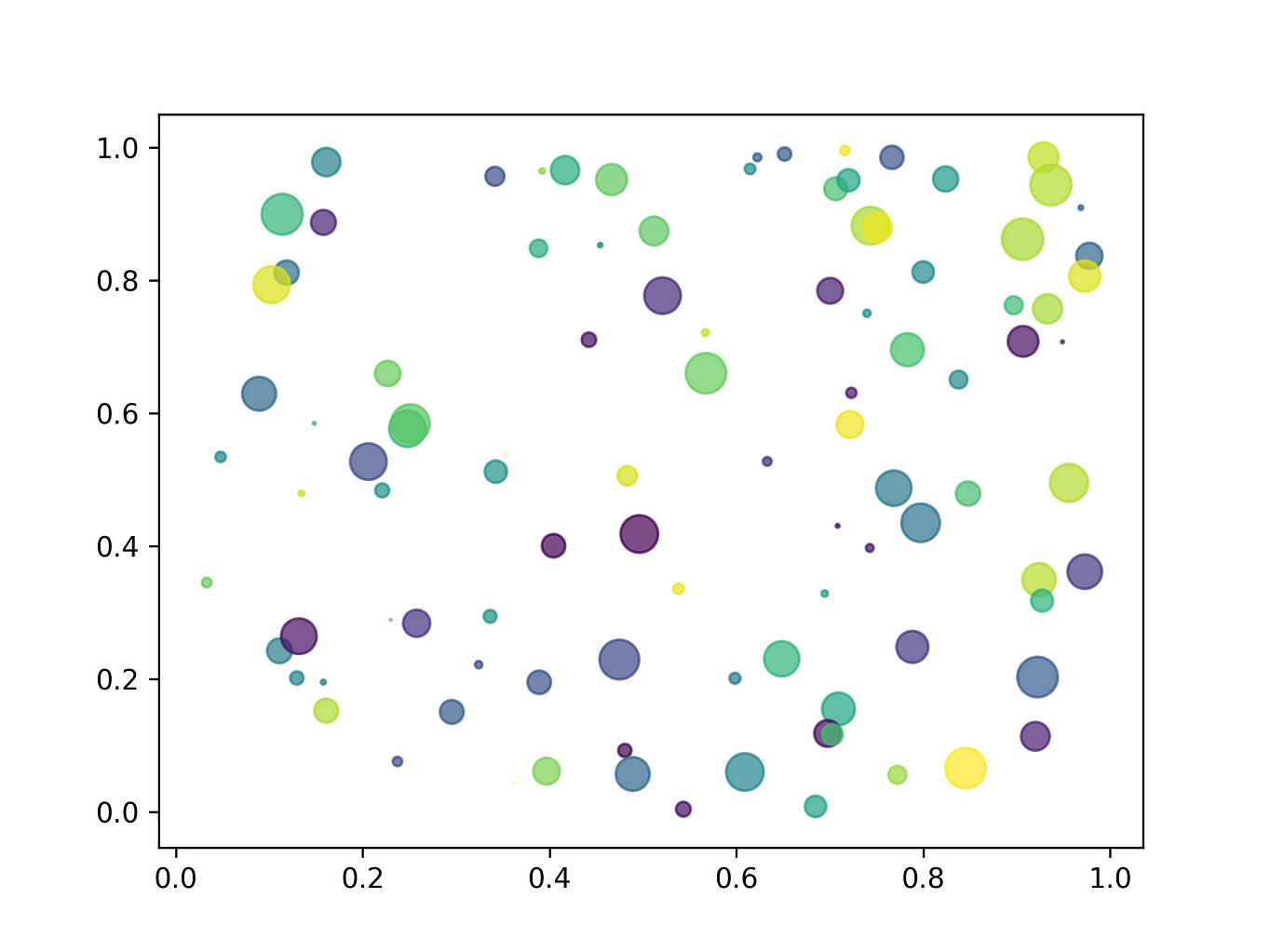

The effect of the transform in a visual example

import matplotlib.pyplot as plt

fig, axes = plt.subplots(1,2)

axes[0].scatter(X[:,0], X[:,1], c=y)
axes[0].set_title("Original data")

axes[1].scatter(X_scaled[:,0], X_scaled[:,1], c=y)
axes[1].set_title("MinMax scaled data")

plt.show()

It is obvious that the values of the features are within the range [0,1] following the Min-Max scaling (right plot).

Another visual example from scikit-learn website

Summary

- One important thing to keep in mind when using Min-Max scaling is that it is highly influenced by the maximum and minimum values in our data, so if the data contains outliers the scaled values will be distorted by them.

- MinMaxScaler rescales the data set such that all feature values are in the range [0, 1]. This is done feature-wise in an independent way.

- The MinMaxScaler scaling might compress all inliers in a narrow range.
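
The compression effect can be illustrated with a small sketch on a made-up feature (the values below are hypothetical and chosen only to exaggerate the effect):

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical single feature: five inliers and one extreme outlier
x = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [1000.0]])

x_scaled = MinMaxScaler().fit_transform(x)
print(x_scaled.ravel())

# The outlier takes the value 1, and every inlier is squeezed near 0
inliers_scaled = x_scaled[:-1]
print(inliers_scaled.max())
```

Here the largest inlier (5) is mapped to (5 - 1) / (1000 - 1), i.e. well below 0.01, so the five inliers become almost indistinguishable after scaling.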

How to deal with outliers

- Manual way (not recommended): Visually inspect the data and remove outliers using statistical outlier removal methods.

- Recommended way: Use the RobustScaler, which also scales the features but uses statistics that are robust to outliers. This scaler removes the median and scales the data according to the quantile range (defaults to IQR: Interquartile Range). The IQR is the range between the 1st quartile (25th percentile) and the 3rd quartile (75th percentile).
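
As a minimal sketch of the recommended way, the snippet below applies RobustScaler with its default settings to a made-up feature containing one outlier (the data is hypothetical). It then checks the result against a manual computation with the median and the IQR.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

# Hypothetical single feature: five inliers and one extreme outlier
x = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [1000.0]])

# Defaults: centering on the median, scaling by the 25th-75th percentile range
robust = RobustScaler()
x_robust = robust.fit_transform(x)
print(x_robust.ravel())

# Manual equivalent: subtract the median and divide by the IQR
median = np.median(x, axis=0)
q1, q3 = np.percentile(x, [25, 75], axis=0)
x_manual = (x - median) / (q3 - q1)

# Compare the manual computation with the scikit-learn result
print(np.allclose(x_robust, x_manual))
```

Unlike Min-Max scaling, the output is not bounded to [0, 1]. The inliers keep a usable spread, while the outlier stays far away from them instead of dictating the scale.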

That’s all for today! Hope you liked this post! Next story coming next week. Stay tuned & safe.


Resources

See all scikit-learn normalization methods side-by-side here: https://scikit-learn.org/stable/auto_examples/preprocessing/plot_all_scaling.html

Get in touch with me

- LinkedIn: https://www.linkedin.com/in/serafeim-loukas/

- ResearchGate: https://www.researchgate.net/profile/Serafeim_Loukas

- EPFL profile: https://people.epfl.ch/serafeim.loukas

- Stack Overflow: https://stackoverflow.com/users/5025009/seralouk

Research Scientist at University of Geneva & University Hospital of Bern. PhD, MSc, M.Eng.
